Memory profilers can hardly be called an “everyday tool.” Typically, developers start thinking about profiling their product closer to its release. This approach may work fine until some last-minute issue like a leak or huge memory traffic crushes all your deadlines. The proactive approach would be to profile your app’s functionality on a daily basis, but who’s got the resources to do that? Well, we think there may be a solution.

If you employ unit testing in your development process, you probably already run a number of tests on app logic regularly. Now imagine that you can write special “memory profiling” tests, e.g. a test that identifies leaks by checking memory for objects of a particular type, or a test that tracks memory traffic and fails if the traffic exceeds some threshold. This is exactly what the dotMemory Unit framework allows you to do. The framework is distributed as a NuGet package and supports the following scenarios:

- Checking memory for objects of a certain type.
- Checking memory traffic.
- Getting the difference between memory snapshots.
- Saving memory snapshots for further investigation in dotMemory (a standalone .NET memory profiler from JetBrains).

In other words, dotMemory Unit extends your unit testing framework with the functionality of a memory profiler.

IMPORTANT: dotMemory Unit is currently in the EAP (Early Access Program) stage. Please use it for evaluation purposes only!

How It Works

-

dotMemory Unit is distributed as a NuGet package installed to your test project:

PM> Install-Package JetBrains.DotMemoryUnit -pre -

dotMemory Unit requires ReSharper unit test runner. To run tests that use dotMemory Unit, you should have either dotCover 3.1 EAP or ReSharper 9.1 EAP05 installed on your system.

-

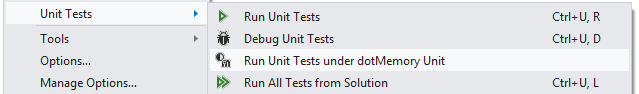

After you install the dotMemory Unit package, ReSharper’s menus for unit tests will include an additional item, Run Unit Tests under dotMemory Unit. In this mode, the test runner will execute dotMemory Unit calls as well as ordinary test logic. If you run a test the ‘normal’ way (without dotMemory Unit support), all dotMemory Unit calls will be ignored.

-

dotMemory Unit works with all of the unit-testing frameworks supported by ReSharper’s unit test runner including MSTest and NUnit.

-

A standalone launcher for integrating with CI systems like JetBrains TeamCity is planned for future releases.

Now let’s take a look at some examples to better understand what dotMemory Unit does.

Example 1: Checking for Specific Objects

Let’s start with something simple. One of the most useful cases can be finding a leak by checking memory for objects of a specific type.

- A lambda is passed to the Check method of the static dotMemory class. This method will be called only if you run the test using Run test under dotMemory Unit.

- The memory object passed to the lambda contains all memory data for the current execution point.

- The GetObjects method returns a set of objects that match the condition passed in another lambda. Here, the condition slices the memory, leaving only objects of the Foo type, and the Assert expression asserts that there should be 0 objects of the Foo type.
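The points above can be sketched as a test like the following. This is a minimal illustration rather than the definitive listing: dotMemory.Check, GetObjects, Assert, and Foo come from the text, while the JetBrains.dotMemoryUnit namespace, the where.Type.Is<Foo>() condition, the ObjectsCount property, and the fixture and method names are assumptions that may differ in the EAP build you install.

```csharp
using JetBrains.dotMemoryUnit; // assumed namespace of the dotMemory Unit package
using NUnit.Framework;

// Hypothetical type whose instances must not stay in memory after the test body.
public class Foo { }

[TestFixture]
public class LeakTests // hypothetical fixture name
{
    [Test]
    public void TestMethod1()
    {
        // ... exercise the code under test here ...

        // The lambda runs only when the test is started under dotMemory Unit;
        // in a 'normal' run the call is ignored.
        dotMemory.Check(memory =>
            Assert.That(
                // Assumed query members: Type.Is<T>() and ObjectsCount.
                memory.GetObjects(where => where.Type.Is<Foo>()).ObjectsCount,
                Is.EqualTo(0)));
    }
}
```

If the assertion fails, the test fails like any other unit test, so a leak of Foo instances shows up in the regular test run.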

Note that dotMemory Unit does not force you to use any specific Assert syntax. Simply use the syntax of the framework your test is written for. For example, an assertion written in NUnit syntax can easily be rewritten for MSTest.
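As a hedged sketch of what the MSTest form might look like, the same check can be expressed with Assert.AreEqual. Only the assertion style changes; the GetObjects query is identical. The namespace, the where.Type.Is<Foo>() condition, ObjectsCount, and the class and method names are assumptions, as before.

```csharp
using JetBrains.dotMemoryUnit; // assumed namespace of the dotMemory Unit package
using Microsoft.VisualStudio.TestTools.UnitTesting;

// Hypothetical type whose instances must not stay in memory after the test body.
public class Foo { }

[TestClass]
public class LeakTestsMsTest // hypothetical class name
{
    [TestMethod]
    public void TestMethod1()
    {
        // ... exercise the code under test here ...

        // Same memory query as in the NUnit version, asserted with MSTest syntax.
        dotMemory.Check(memory =>
            Assert.AreEqual(
                0,
                memory.GetObjects(where => where.Type.Is<Foo>()).ObjectsCount));
    }
}
```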

With dotMemory Unit you can select a set of objects by almost any condition, get the number of objects in this set and their size, and use these data in your assertions.

For example, the same approach can be used to check for objects in the large object heap.
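A possible shape for such a large object heap check is sketched below. The where.Generation.Is(Generation.LOH) condition and the location of the Generation enum are assumptions about the API, not something the text confirms, so verify them against the package version you use.

```csharp
using JetBrains.dotMemoryUnit; // assumed namespace; Generation is assumed to live here too
using NUnit.Framework;

[TestFixture]
public class LargeObjectHeapTests // hypothetical fixture name
{
    [Test]
    public void NothingLandsInLargeObjectHeap()
    {
        // ... exercise the code under test here ...

        // Select objects by heap generation instead of by type (assumed condition syntax).
        dotMemory.Check(memory =>
            Assert.That(
                memory.GetObjects(where => where.Generation.Is(Generation.LOH)).ObjectsCount,
                Is.EqualTo(0)));
    }
}
```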

Example 2: Checking Memory Traffic

The test for checking memory traffic is even simpler. All you need to do is mark the test with the AssertTraffic attribute. For example, you can assert that the amount of memory allocated by all the code in TestMethod1 does not exceed 1,000 bytes.
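A minimal sketch of such a test follows. AssertTraffic and TestMethod1 come from the text; the AllocatedSizeInBytes property name and the namespace are assumptions, so treat the exact attribute syntax as illustrative.

```csharp
using JetBrains.dotMemoryUnit; // assumed namespace of the dotMemory Unit package
using NUnit.Framework;

[TestFixture]
public class TrafficTests // hypothetical fixture name
{
    // Fails if the code in TestMethod1 allocates more than 1,000 bytes
    // (AllocatedSizeInBytes is an assumed property name).
    [AssertTraffic(AllocatedSizeInBytes = 1000)]
    [Test]
    public void TestMethod1()
    {
        // ... some code whose allocations should stay under the limit ...
    }
}
```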

Example 3: Complex Scenarios for Checking Memory Traffic

If you need to get more complex information about memory traffic (say, check for traffic of objects of a particular type during some specific time interval), you can use a similar approach to the one from the first example. The lambdas passed to the dotMemory.Check method slice and dice traffic data by various conditions.

- To mark time intervals where memory traffic can be analyzed, use checkpoints created by dotMemory.Check (as you probably guessed, this method simply takes a memory snapshot).

- The checkpoint that defines the starting point of the interval is passed to the GetTrafficFrom method. For example, you can assert that the total size of objects implementing the IFoo interface created in the interval between memoryCheckPoint1 and memoryCheckPoint2 is less than 1,000 bytes.

- You can get traffic data for any checkpoint that was set earlier. Thus, a later dotMemory.Check call can get the traffic between itself and memoryCheckPoint2.
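The checkpoint workflow described above might look like the sketch below. dotMemory.Check, GetTrafficFrom, IFoo, memoryCheckPoint1, and memoryCheckPoint2 come from the text. The Where filter, obj.Interface.Is<T>(), obj.Type.Is<T>(), AllocatedMemory.SizeInBytes, and AllocatedMemory.ObjectsCount are assumed member names, and the Foo and Bar types and the second assertion (fewer than 10 Bar objects) are purely illustrative.

```csharp
using JetBrains.dotMemoryUnit; // assumed namespace of the dotMemory Unit package
using NUnit.Framework;

// Hypothetical types standing in for the code under test.
public interface IFoo { }
public class Foo : IFoo { public void Bar() { /* work that may allocate IFoo objects */ } }
public class Bar { public void Foo() { /* work that may allocate Bar objects */ } }

[TestFixture]
public class TrafficIntervalTests // hypothetical fixture name
{
    [Test]
    public void TrafficStaysWithinLimits()
    {
        var foo = new Foo();
        var bar = new Bar();

        // First checkpoint: start of the first interval.
        var memoryCheckPoint1 = dotMemory.Check();
        foo.Bar();

        // Second checkpoint: assert on traffic since memoryCheckPoint1.
        var memoryCheckPoint2 = dotMemory.Check(memory =>
            Assert.That(
                memory.GetTrafficFrom(memoryCheckPoint1)
                      .Where(obj => obj.Interface.Is<IFoo>())
                      .AllocatedMemory.SizeInBytes,
                Is.LessThan(1000)));
        bar.Foo();

        // Third check: traffic between this call and memoryCheckPoint2.
        dotMemory.Check(memory =>
            Assert.That(
                memory.GetTrafficFrom(memoryCheckPoint2)
                      .Where(obj => obj.Type.Is<Bar>())
                      .AllocatedMemory.ObjectsCount,
                Is.LessThan(10)));
    }
}
```

Because each Check call returns a checkpoint, any earlier checkpoint can serve as the start of an interval, so a single test can assert on several overlapping intervals.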

Example 4: Comparing Snapshots

As in the ‘standalone’ dotMemory profiler, you can use checkpoints not only to compare traffic but for all kinds of snapshot comparisons. For example, you can assert that no objects from the MyApp namespace survived garbage collection in the interval between memoryCheckPoint1 and the second dotMemory.Check call.
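A hedged sketch of such a comparison is shown below. dotMemory.Check, memoryCheckPoint1, and the MyApp namespace come from the text. GetDifference, GetSurvivedObjects, where.Namespace.Like, and ObjectsCount are assumed API names, and Foo is a hypothetical stand-in for the code under test.

```csharp
using JetBrains.dotMemoryUnit; // assumed namespace of the dotMemory Unit package
using NUnit.Framework;

namespace MyApp
{
    // Hypothetical type standing in for the application code under test.
    public class Foo { public void Bar() { /* work that should leave nothing behind */ } }
}

[TestFixture]
public class SnapshotComparisonTests // hypothetical fixture name
{
    [Test]
    public void NoMyAppObjectsSurvive()
    {
        var foo = new MyApp.Foo();

        // First snapshot: the baseline for the comparison.
        var memoryCheckPoint1 = dotMemory.Check();
        foo.Bar();

        // Second snapshot: compare with the baseline and look only at
        // survived objects from the MyApp namespace (assumed member names).
        dotMemory.Check(memory =>
            Assert.That(
                memory.GetDifference(memoryCheckPoint1)
                      .GetSurvivedObjects()
                      .GetObjects(where => where.Namespace.Like("MyApp"))
                      .ObjectsCount,
                Is.EqualTo(0)));
    }
}
```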

Conclusion

dotMemory Unit is very flexible and allows you to check almost any aspect of app memory usage. Use “memory” tests in the same way as unit tests on app logic:

- After you manually find an issue (such as a leak), write a memory test that covers it.

- Write tests for proactive testing – to ensure that new product features do not create any memory issues, like objects left in memory or large traffic.

Thanks for reading and don’t hesitate to try dotMemory Unit EAP on your own! It’s absolutely free, and the only requirement is ReSharper or dotCover installed on your machine.